Using serverless components is an easy and cost-effective way to deploy applications. Unfortunately, keeping track of resources and troubleshooting unusual utilization can be harder than on traditional servers, because you have no access to a process manager and resources are short-lived. A common example is poor memory management, which can eventually lead to application crashes.

This article explains what memory leaks and leak-like effects on serverless components actually are, how they arise, and a common but often overlooked cause of poor memory management. Google Cloud's serverless product Cloud Run serves as the example throughout.

A running process allocates memory to store temporary data. When the process no longer needs that memory, it should release it so that it can be reused by the same process or by others. If memory is not released and none is left for other processes, the operating system starts terminating processes to reclaim it (on Linux, this is the job of the out-of-memory killer). In a container, this will most likely lead to a restart.

Memory leaks on serverless and physical machines alike usually share the same typical causes, such as circular references, infinite loops, or other bad coding practices. A lesser-known characteristic of serverless components, however, is that data written to the local filesystem is stored in memory by default. This is also why you don't have to specify a disk size when creating a Cloud Run service [1]. As local files accumulate, this buildup can eventually have the same effect as a leak, until the container is redeployed or restarted by the platform.
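Because files on the local filesystem live in the instance's memory, it can help to track how much space they take. A minimal sketch, assuming the application writes its files under a single directory (the `/tmp/app-data` path below is hypothetical):

```python
import os
from pathlib import Path


def local_files_bytes(root: str) -> int:
    """Return the total size in bytes of regular files under root.

    On Cloud Run, files on the container's writable filesystem are held in
    the instance's memory, so this approximates how much memory they use.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                if path.is_file() and not path.is_symlink():
                    total += path.stat().st_size
            except FileNotFoundError:
                continue  # file was deleted while walking the tree
    return total


if __name__ == "__main__":
    data_dir = "/tmp/app-data"  # hypothetical directory the app writes to
    print(f"{local_files_bytes(data_dir) / 2**20:.1f} MiB held in local files")
```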

A memory leak will eventually lead to container restarts, but it doesn't always cause immediate issues for users and may go unnoticed at first. Still, the restarts and memory buildup result in extra costs for the cloud customer and can cause processes to fail when too little memory is available.

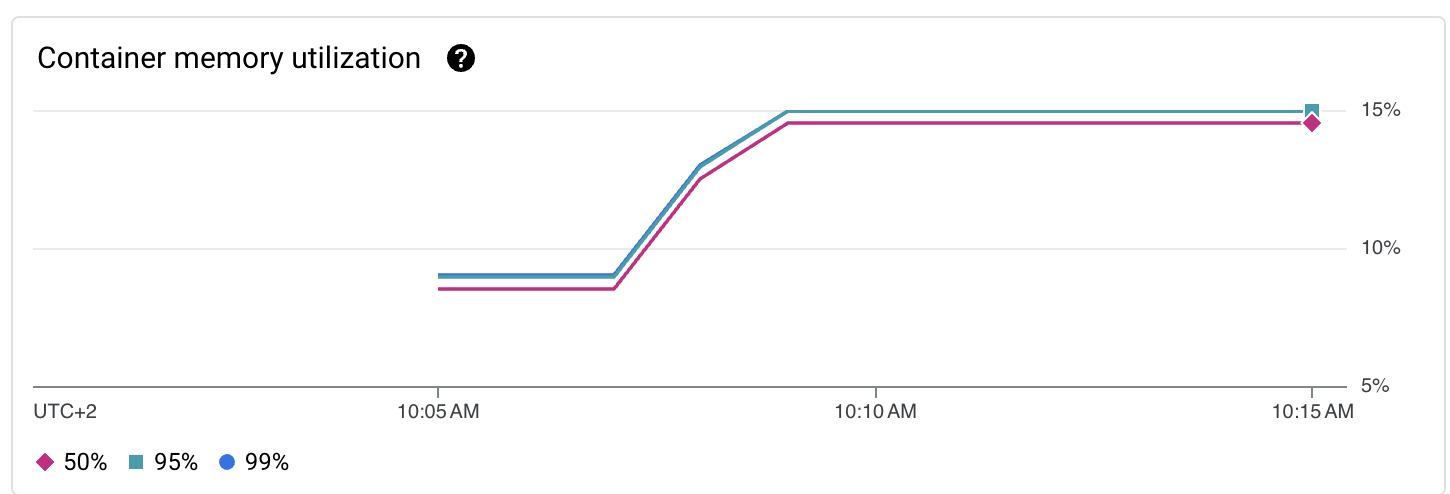

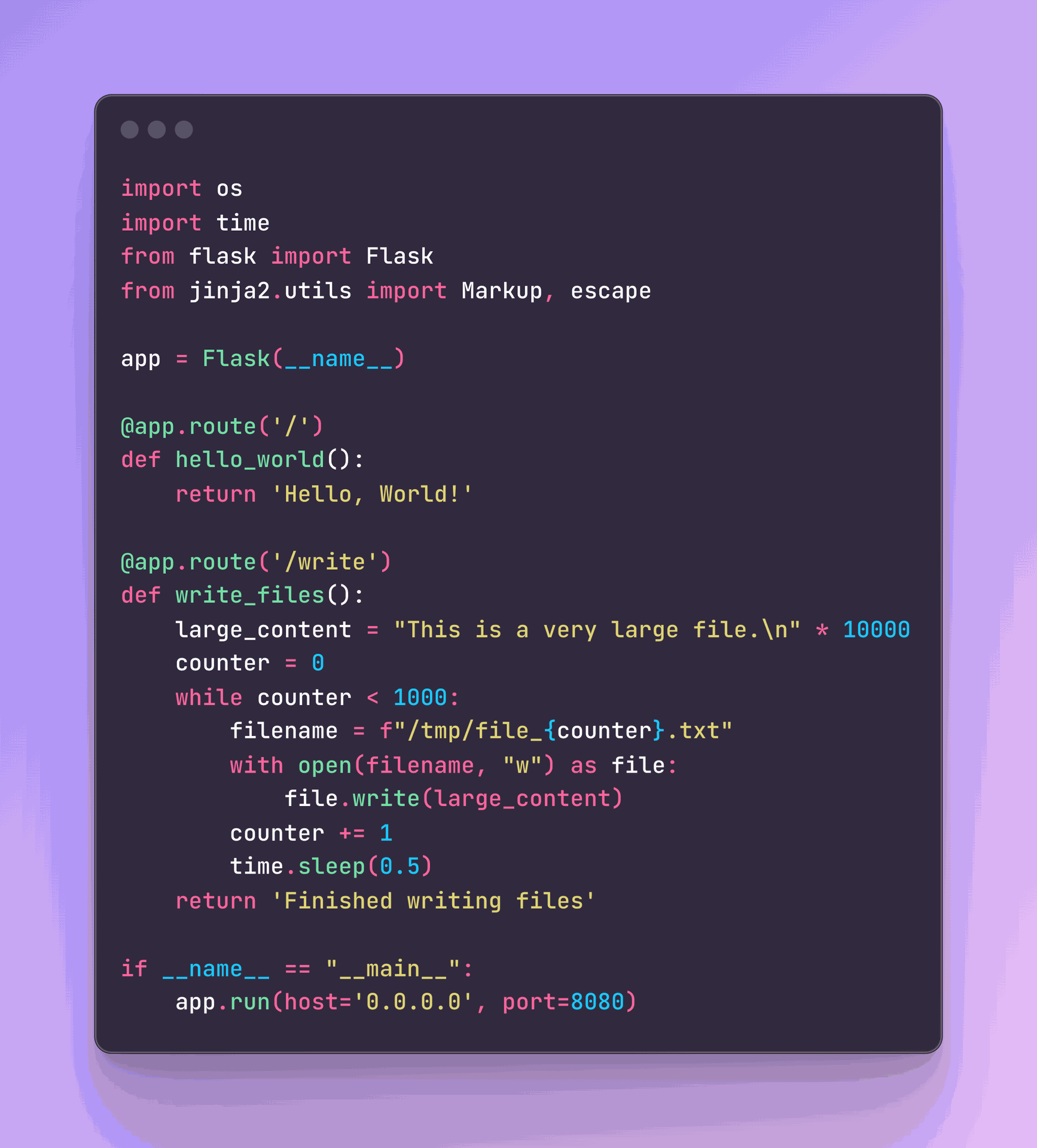

The example above shows a Cloud Run deployment (code at the end of the article) that writes files to local storage on command to reproduce the effects of a memory leak. Initially, memory usage is stable. When files are written to local storage, memory usage visibly jumps. Afterwards, even without further file dumps, memory usage stays at the higher level because the files still exist.
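A comparable service can be sketched in a few lines. This is a minimal, hypothetical stand-in rather than the deployment behind the charts, assuming Python's standard `http.server` module and made-up `/dump` and `/clear` endpoints and `DUMP_DIR` path:

```python
import os
import shutil
import uuid
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical directory on the container's local (in-memory) filesystem.
DUMP_DIR = "/tmp/dumps"


def dump_files(directory: str, count: int, size_bytes: int) -> int:
    """Write `count` files of `size_bytes` each and return the bytes written."""
    os.makedirs(directory, exist_ok=True)
    for _ in range(count):
        path = os.path.join(directory, f"dump-{uuid.uuid4().hex}.bin")
        with open(path, "wb") as f:
            f.write(b"\0" * size_bytes)
    return count * size_bytes


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        url = urlparse(self.path)
        params = parse_qs(url.query)
        status, body = 200, "ok\n"
        if url.path == "/dump":
            try:
                count = int(params.get("count", ["1"])[0])
                size_mb = int(params.get("size_mb", ["10"])[0])
                if count < 0 or size_mb < 0:
                    raise ValueError("negative value")
            except ValueError:
                status, body = 400, "count and size_mb must be non-negative integers\n"
            else:
                written = dump_files(DUMP_DIR, count, size_mb * 1024 * 1024)
                body = f"wrote {written} bytes to {DUMP_DIR}\n"
        elif url.path == "/clear":
            # Deleting the files releases the memory they occupied.
            shutil.rmtree(DUMP_DIR, ignore_errors=True)
            body = "cleared\n"
        data = body.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve() -> None:
    # Cloud Run passes the port to listen on in the PORT environment variable.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), Handler).serve_forever()
```

Calling `serve()` as the container's entry point starts the server. Requesting `/dump?count=5&size_mb=20` a few times while watching the instance's memory metric should reproduce the stepwise increase described above, and `/clear` should bring usage back down.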

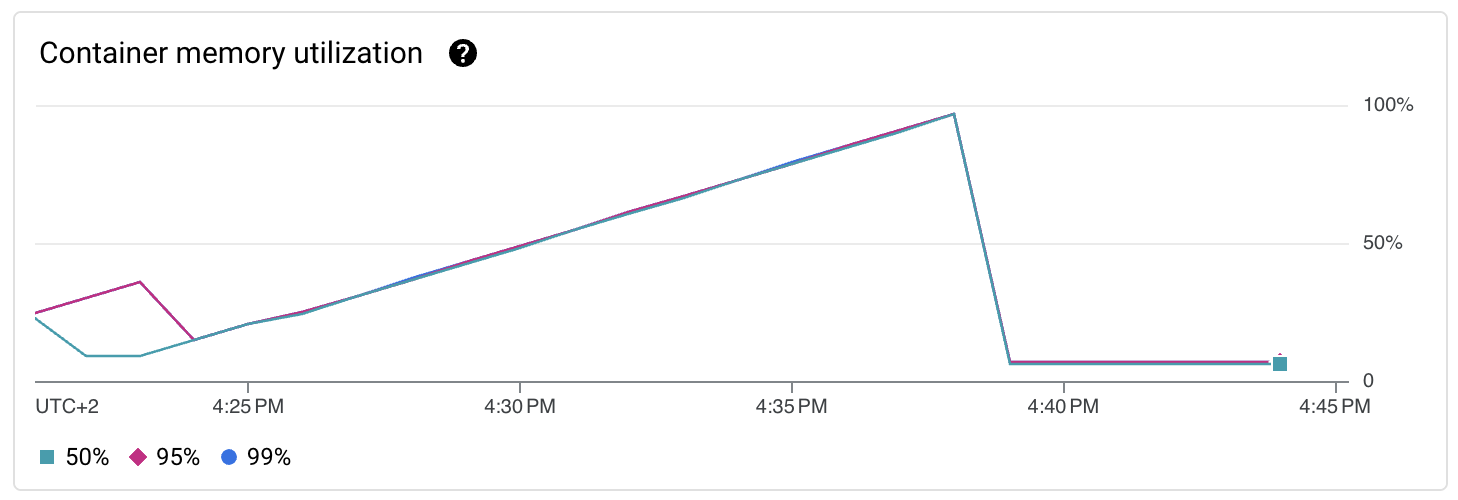

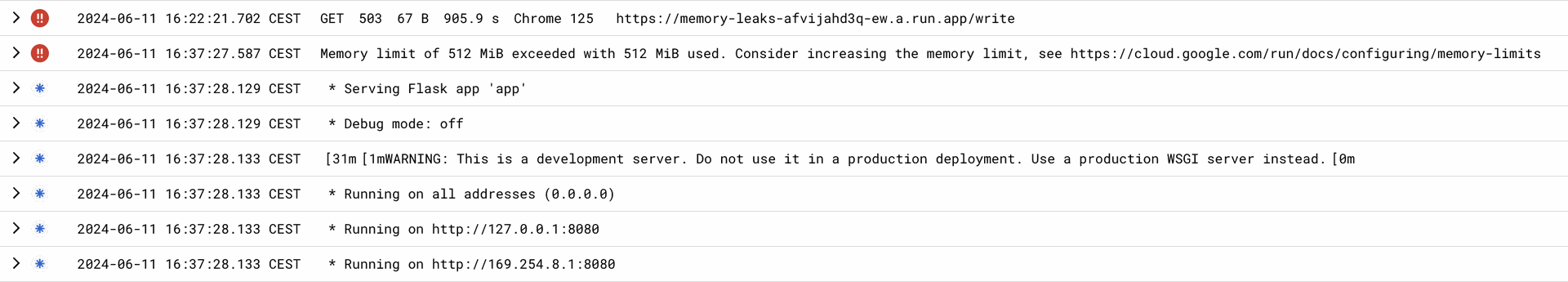

If files kept being written to local storage, the container would eventually hit its memory limit and restart, and the cycle would repeat, as shown in the charts above.
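How quickly an instance reaches that limit is simple arithmetic: the headroom between baseline usage and the memory limit, divided by the rate at which local files grow. A minimal sketch with made-up numbers (the 512 MiB limit, 112 MiB baseline and 50 MiB of new files per hour are illustrative, not measurements from the charts):

```python
def hours_until_limit(limit_mib: float, baseline_mib: float,
                      growth_mib_per_hour: float) -> float:
    """Estimate hours until local files push an instance to its memory limit.

    Assumes the application's own usage stays at baseline_mib and that
    files on the in-memory filesystem grow at a constant rate.
    """
    if growth_mib_per_hour <= 0:
        return float("inf")  # no growth, so no restart caused by file buildup
    headroom = max(limit_mib - baseline_mib, 0.0)
    return headroom / growth_mib_per_hour


# Illustrative values only.
print(hours_until_limit(512, 112, 50))  # 8.0
```

Since the in-memory filesystem is wiped when the instance restarts, the same estimate then applies to every subsequent cycle.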

A common real-world cause, and the reason for the example above, is writing large amounts of logs to files on local storage instead of to stdout or stderr, or to external storage such as an NFS share or a Cloud Storage bucket [2][3]. While acceptable on a traditional server, this practice can lead to memory pressure, container restarts and increased costs in a cloud environment like Cloud Run.
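In Python, for example, this means attaching the logging handler to stdout instead of a file. Cloud Run forwards whatever is written to stdout and stderr to Cloud Logging, and a line containing a single JSON object is parsed as a structured entry with fields such as `severity` and `message`. A minimal sketch; the logger name `app` and the extra `logger` field are hypothetical choices:

```python
import json
import logging
import sys
from typing import Optional, TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single line of JSON."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "severity": record.levelname,  # e.g. INFO, WARNING, ERROR
            "message": record.getMessage(),
            "logger": record.name,
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def configure_logging(stream: Optional[TextIO] = None,
                      name: str = "app") -> logging.Logger:
    """Send log records to a stream (stdout by default) instead of a local file."""
    logger = logging.getLogger(name)
    logger.handlers.clear()  # avoid duplicate output if called more than once
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # don't also hand records to the root logger
    return logger


if __name__ == "__main__":
    log = configure_logging()
    log.info("request handled")
    # prints: {"severity": "INFO", "message": "request handled", "logger": "app"}
```

Nothing is written to the container's filesystem, so log volume no longer accumulates in the instance's memory.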

Understanding this behavior is therefore crucial for managing and optimizing Cloud Run deployments effectively. Knowing how writing to a container's local filesystem affects your cloud costs and system reliability lets you take steps to mitigate these issues and keep your services running smoothly.

Key takeaways:

- Memory management in serverless environments has its own peculiarities.
- If it looks like a memory leak and behaves like a memory leak in a serverless environment, it could very well be something else.
- Mounting external volumes is the way to prevent the scenarios above from happening.
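One way to make sure files actually land on the mounted volume is to check the target directory at startup. A minimal sketch, assuming the volume's mount path is supplied through a hypothetical `DATA_DIR` environment variable; `os.path.ismount` reports whether that path is a mount point, so a missing or misconfigured mount fails fast instead of silently filling the in-memory filesystem:

```python
import os
from pathlib import Path


def data_dir(env_var: str = "DATA_DIR") -> Path:
    """Return the directory for logs/output, requiring it to be a mount point.

    env_var is a hypothetical setting holding the volume's mount path,
    e.g. where an NFS share or Cloud Storage bucket is mounted.
    """
    value = os.environ.get(env_var)
    if not value:
        raise RuntimeError(f"{env_var} is not set")
    path = Path(value)
    if not os.path.ismount(path):
        # Writing here would fill the container's in-memory filesystem instead.
        raise RuntimeError(f"{path} is not a mounted volume")
    return path
```

Calling `data_dir()` once at startup and writing log or output files beneath the returned path keeps them off the instance's memory.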

Example code:

[1] https://shorturl.at/RMinI

[2] https://cloud.google.com/run/docs/configuring/services/nfs-volume-mounts

[3] https://cloud.google.com/blog/products/serverless/introducing-cloud-run-volume-mounts