When building an application, the choice of framework is often based on previous experience and on the components that come built in. That works well for a classic hosting setup with a single server, but when a more scalable solution is needed, a few challenges tend to pop up. This article covers our experiences with Craft CMS.

#1: The conservative cloud solution

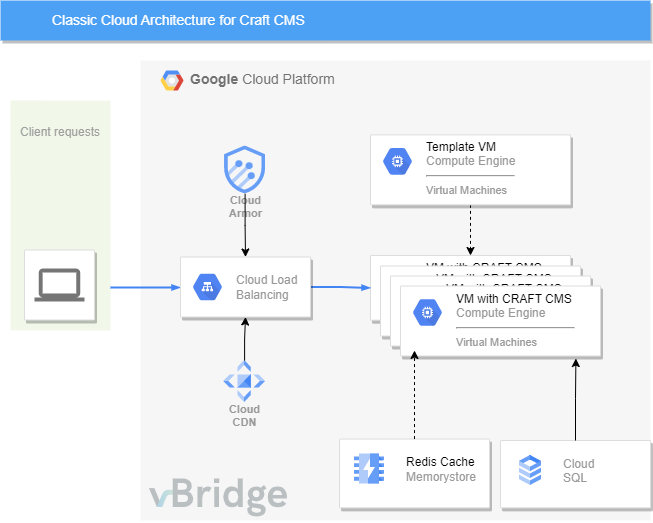

Last year we delivered a web infrastructure to a customer that endured peak loads of thousands of concurrent users. The application was built with Craft CMS. Because of tight deadlines and project constraints, we relied on rather classic cloud components to make this possible: autoscaling compute instances, Redis caching, HTTP(S) load balancing and battle-tested automation.

The result was exactly what we aimed for. More than 95% of our requests were served well under 1 second, even during those moments of high load.

There are, however, a few challenges when running multiple compute nodes with Craft CMS behind a load balancer:

- Updating is difficult, because multiple layers of caching introduce inconsistencies

- Session handling

- Craft CMS holds a job queue that needs to be triggered for background and maintenance tasks

- Local assets are destroyed when autoscaling happens

- Building a performant CI/CD pipeline is tricky and cumbersome (it involves creating VM templates)

- A patching strategy is hard to implement

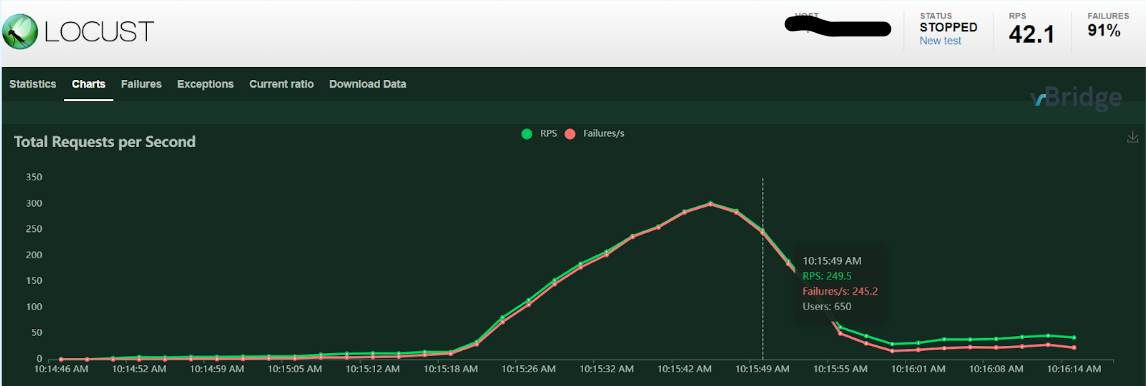

As the graph shows, scaling takes about a minute (during that time additional load is dropped!), so you always need active reserve capacity to cope with bursts of traffic. For that reason, when we knew the application was going to be mentioned on national news (the ultimate load test), we had to pre-warm instances and the cache just to be sure.

The end result is that you always pay for a minimum amount of CPU and memory, even when all is quiet on your application.
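To make the reserve-capacity reasoning concrete, here is a rough back-of-the-envelope sketch. It assumes traffic grows linearly and that a new instance only helps once it has finished starting. The ramp (0 to 350 requests per second in 3 minutes) is borrowed from the benchmark later in this article purely as an illustration, and the per-instance throughput of 25 requests per second is a hypothetical figure, not a measurement.

```python
import math


def reserve_capacity_rps(ramp_rps_per_second: float, scale_up_seconds: float) -> float:
    """Throughput that must already be running to absorb a linear
    traffic ramp while newly requested instances are still starting."""
    return ramp_rps_per_second * scale_up_seconds


def reserve_instances(headroom_rps: float, rps_per_instance: float) -> int:
    """Number of idle instances needed to provide that headroom."""
    return math.ceil(headroom_rps / rps_per_instance)


# Illustrative numbers only (see the assumptions in the text above).
ramp = 350 / 180                              # requests/second gained per second
headroom = reserve_capacity_rps(ramp, 60)     # scaling takes about a minute
print(round(headroom, 1))                     # 116.7
print(reserve_instances(headroom, 25))        # 5 (25 rps per instance is hypothetical)
```

Under these assumptions, roughly a third of the peak throughput has to be kept running as idle headroom, which is where the baseline cost comes from.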

The resulting architecture:

#2: Going serverless

On our next Craft CMS project, we started from scratch with one question in mind: would it be possible to run Craft CMS serverless? After a quick search on the net, it seemed that not many people had tried to answer that question.

Challenge accepted, back to the drawing board!

The experience with Craft in the first project helped us a lot. We already knew some of the challenges we should counter:

- Create a blazing fast container: we don’t want users to wait for their first page (yes, we scale to 0!)

- Cope with the job queues

- Never store anything locally: containers are short lived

- Build a CI/CD pipeline

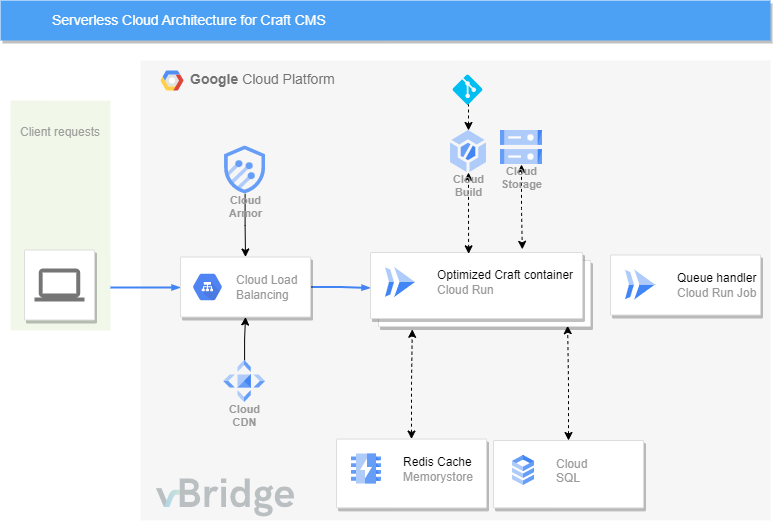

The central technology in this new architecture is GCP Cloud Run. It scales very fast and integrates very well with other products in the GCP portfolio (Secret Manager, load balancing, Cloud Armor, …). Although competitors (AWS App Runner, Azure ACI) are trying hard to develop a similar product, Cloud Run still has an unmatched feature set.

To handle the task queues, we selected the brand-new Cloud Run Job functionality to simulate cron-like behavior. The road to success was bumpy: Craft does have a few peculiarities to work around, but after tweaking, testing and optimizing, the result is simply awesome:

- The application scales very fast from 0 up to 1000 containers

- The container footprint is minimal, reducing the need for patching

- The container is more lightweight than the VM, which means more pages per dollar.

- The CI/CD pipeline ticks all the boxes.

- The container is completely stateless: no updates or management to care about, and a much smaller attack surface.
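As an illustration of the cron-like queue trigger mentioned above, here is a minimal sketch of an entrypoint that a scheduled job could run. It is not the setup we used, just one way to express the idea. It relies on Craft's `queue/run` console command and assumes the `php` binary and the `craft` executable are reachable from the working directory of the container. The function names are hypothetical. It also assumes Craft's `runQueueAutomatically` setting is switched off, so that web requests do not try to process the queue themselves.

```python
import subprocess
from typing import Callable


def build_queue_command(php_binary: str = "php", craft_path: str = "craft") -> list[str]:
    """Command line that asks Craft to process all pending queue jobs once."""
    return [php_binary, craft_path, "queue/run"]


def run_queue_once(runner: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> int:
    """Run the queue a single time and return the process exit code.

    The runner is injectable so the logic can be exercised without PHP.
    A non-zero exit code lets the scheduler mark the execution as failed.
    """
    result = runner(build_queue_command(), check=False)
    return result.returncode
```

A job entrypoint would then simply exit with the value returned by `run_queue_once()`, and the scheduler decides how often that happens.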

Another benchmark after all the changes:

The test results above say it all: our new stack scales almost perfectly. When we increase the load quite fast (from 0 to 350 requests per second within 3 minutes), almost no requests are dropped. Mission accomplished!